This article was originally published on November 3, 2021.

For visual artists who aren’t able to use their hands, there are quite a few existing adaptive strategies and assistive technologies. When it comes to painting or drawing, one of the oldest and most common methods involves no technology at all: an artist uses their mouth instead of their hand to hold a paintbrush, pencil or other drawing instrument. On the tech side, there have been some really interesting developments as well. Eye tracking technology, for example, allows people to interact with graphical user interfaces on computer screens and “click” and manipulate computer-based art tools using just their eyes. Both approaches have pros and cons. The analog hold-in-your-mouth method has a low barrier to entry and lets artists work with traditional art supplies. But holding a paintbrush in your mouth can be ergonomically challenging and cause fatigue and cramping. Digital tools bypass some of those issues. But sophisticated eye tracking systems can be expensive, and artists are typically limited to digital art, or a printed version of something they created on a computer.

For their senior design project, UM-Dearborn students Dean Lawrence, Hannah Imboden, Hussein Chebli and Maya Kabbash set out to create a prototype technology that combines advantages of both approaches. Imboden, who’s both a painter and aspiring robotics engineer, was the one who pitched the initial idea of a “painting robot,” thinking that might look something like a robotic arm. But she and Lawrence, who’d taken a lot of classes in deep learning-based eye tracking and object recognition, got to talking and eventually came up with a modified approach: What if they used eye tracking to allow someone to create art on a computer screen, and then built a custom CNC machine capable of putting that art to canvas using traditional paints and brushes? If they could find a way to make that work, an artist could actually create a real paint-on-canvas style work, but with the ergonomic advantages of an eye tracking system.

If that sounds a little ambitious for a two-semester senior design project, it is. (Their faculty advisor, Professor Adnan Shaout, says it’s one of the most exciting he’s seen.) It’s even more impressive when you consider the typical budget for a senior design project is capped at $500. The students knew their CNC machine would eat up most of that, so they had to think of super low-cost solutions for the eye tracking part. “And that’s pretty tricky. I mean, you can get off-the-shelf eye tracking solutions, but they tend to be expensive. And if you want the really accurate stuff, they’re really expensive,” Lawrence explains. “So that’s when we started thinking, what if we could find some way to use a regular webcam? That’s something a lot of people already have in their laptop.” From a design perspective, that means it’s basically free.

Creating a webcam-based eye tracking system, however, poses some immediate engineering challenges. The root problem is that the average laptop or USB webcam has fairly low resolution, while eye tracking algorithms typically need a sharp image to accurately register where on the screen the user’s gaze is focused. Without that accuracy, artists can’t reliably “click” on-screen tools, and painting with your eyes quickly becomes frustrating.

You might think if you just made the on-screen buttons really big, that would give the eye tracking software a larger margin for error. But it turns out it’s a little more complicated than that. From her initial literature review, Imboden remembered learning that the way in which our eyes focus is actually a little quirky. “When your eyes are trying to focus on something, they don’t stay in one spot, they actually move around a little bit,” Imboden explains. “So if you give someone a really big thing to look at, their gaze might wander around to different parts of the object, including the edges. And then, if they’re looking at the edge, because of the low accuracy, the computer might register their gaze as outside the object.” Their solution: Create small on-screen buttons but with a large area of tolerance outside the actual button that’s still capable of registering a click. The smaller object helps the person focus their gaze, and the invisible active zone around the buttons takes care of the webcam’s resolution-based accuracy issues. Pretty ingenious.
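To make the “small button, big invisible zone” idea concrete, here is a minimal sketch of how such a hit test might work. It is not the team’s code: the `Button` class, the `resolve_gaze` function, the pixel sizes and the 60-pixel tolerance margin are all hypothetical values chosen for illustration. Each button is drawn small, but a gaze estimate counts as “on” the button anywhere inside a larger rectangle around it; if two tolerance zones overlap, the sketch assumes the button whose center is closest wins.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Button:
    """Hypothetical on-screen button: small visible area plus an invisible margin."""
    name: str
    cx: float      # center x, in screen pixels
    cy: float      # center y, in screen pixels
    half_w: float  # half of the visible width
    half_h: float  # half of the visible height
    margin: float  # invisible tolerance added on every side of the visible area

    def hit_zone_contains(self, x: float, y: float) -> bool:
        # The active zone is the visible rectangle grown by `margin` on each side.
        return (abs(x - self.cx) <= self.half_w + self.margin
                and abs(y - self.cy) <= self.half_h + self.margin)


def resolve_gaze(gaze: Tuple[float, float],
                 buttons: Sequence[Button]) -> Optional[Button]:
    """Return the button whose tolerance zone contains the gaze point, if any."""
    x, y = gaze
    candidates = [b for b in buttons if b.hit_zone_contains(x, y)]
    if not candidates:
        return None
    # If tolerance zones overlap, prefer the button with the nearest center.
    return min(candidates, key=lambda b: (x - b.cx) ** 2 + (y - b.cy) ** 2)


if __name__ == "__main__":
    red = Button("red", cx=100, cy=100, half_w=20, half_h=20, margin=60)
    blue = Button("blue", cx=300, cy=100, half_w=20, half_h=20, margin=60)
    print(resolve_gaze((170, 130), [red, blue]))  # noisy gaze near "red" still hits it
    print(resolve_gaze((200, 100), [red, blue]))  # gap between zones: no button
```

With these example numbers, a jittery gaze estimate 70 pixels to the right of the red swatch still selects it, while a point halfway between the two swatches selects nothing, so a wandering gaze is less likely to trigger the wrong tool.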

To further help out the artist, the software only registers a click when the person holds their gaze for 3 seconds. “Another cool thing we did is that over the course of those 3 seconds, the target gets smaller and smaller until it registers the click,” Lawrence explains. The gradually shrinking object helps the person hold their focus, and gives them feedback that their eyes are leading them to the right action.
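A dwell-to-click mechanism like the one Lawrence describes can be sketched as a small state machine that is fed the target under the gaze on every video frame. This is an illustrative sketch, not the EyePaint implementation: the `DwellClicker` class, its `update` method and the 30 percent minimum size are hypothetical. The 3-second dwell comes from the article; the linear shrink and the rule that a click fires only once until the gaze moves away are assumptions.

```python
from typing import Optional, Tuple


class DwellClicker:
    """Registers a 'click' once the gaze stays on one target for dwell_s seconds."""

    def __init__(self, dwell_s: float = 3.0, min_scale: float = 0.3) -> None:
        self.dwell_s = dwell_s      # how long the gaze must be held
        self.min_scale = min_scale  # target size (fraction) at the moment of the click
        self._target: Optional[str] = None
        self._start = 0.0
        self._fired = False

    def update(self, target: Optional[str], now: float) -> Tuple[float, bool]:
        """Feed the target under the gaze (or None) and a timestamp in seconds.

        Returns (scale, clicked): scale shrinks linearly from 1.0 to min_scale
        over the dwell period; clicked is True only on the update that completes it.
        """
        if target != self._target:
            # Gaze moved to a different target (or away): restart the timer.
            self._target = target
            self._start = now
            self._fired = False
        if target is None:
            return 1.0, False
        progress = min((now - self._start) / self.dwell_s, 1.0)
        scale = 1.0 - progress * (1.0 - self.min_scale)
        if progress >= 1.0 and not self._fired:
            self._fired = True  # fire once; no repeats until the gaze leaves
            return scale, True
        return scale, False


if __name__ == "__main__":
    clicker = DwellClicker()
    for t in (0.0, 1.0, 2.0, 3.0):
        print(t, clicker.update("red", t))
```

The returned `scale` is what the interface would use to redraw the shrinking target, so the same timer drives both the visual feedback and the click itself.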

While the team wrote the code for all that (90 percent of it from scratch, according to Lawrence), Imboden led the effort to build the CNC machine that would paint what the artist was creating on the computer screen. One of their more interesting design choices is that the CNC machine puts the work on canvas one stroke at a time, as the artist finalizes each on-screen choice. Imboden and Lawrence say this makes the CNC feel much more like an extension of the artist’s body, not simply a fancy printer that replicates a digital work all at once when it’s finished.
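The stroke-at-a-time approach can be illustrated with a short sketch. The article does not say how the EyePaint software talks to its CNC machine, so this assumes a hobby-style controller that accepts standard G-code (`G0` for rapid moves, `G1` for controlled moves), a Z axis that raises and lowers the brush, and canvas coordinates in millimeters. The function names, the brush heights, the feed rate and the `send` callback (which might, for example, write to a serial port) are all hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) on the canvas, in millimeters (assumed)


def stroke_to_gcode(points: Sequence[Point], z_up: float = 5.0,
                    z_down: float = 0.0, feed_mm_min: float = 1500.0) -> List[str]:
    """Translate one finished on-screen stroke into G-code lines (hypothetical values)."""
    if not points:
        return []
    x0, y0 = points[0]
    lines = [
        f"G0 Z{z_up:.2f}",                       # lift the brush before travelling
        f"G0 X{x0:.2f} Y{y0:.2f}",               # rapid move to the stroke's start
        f"G1 Z{z_down:.2f} F{feed_mm_min:.0f}",  # lower the brush; set the painting feed rate
    ]
    lines += [f"G1 X{x:.2f} Y{y:.2f}" for x, y in points[1:]]  # paint along the path
    lines.append(f"G0 Z{z_up:.2f}")              # lift the brush when the stroke ends
    return lines


def on_stroke_finalized(points: Sequence[Point], send: Callable[[str], None]) -> None:
    """Stream a stroke to the machine as soon as the artist confirms it."""
    for line in stroke_to_gcode(points):
        send(line)


if __name__ == "__main__":
    on_stroke_finalized([(10, 10), (50, 10), (50, 40)], print)
```

Because each stroke is sent the moment it is confirmed rather than batched into one file at the end, the machine’s motion follows the artist’s decisions as they happen, which is the “extension of the artist’s body” effect the students describe.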

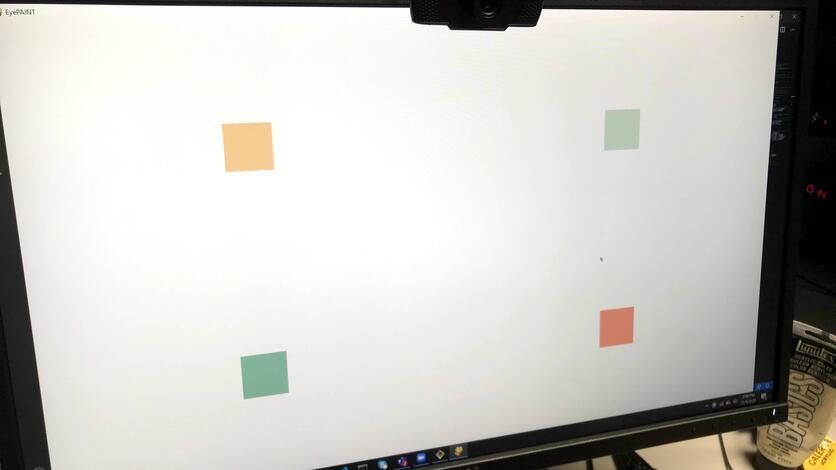

Aside from one hiccup with a faulty power supply, which dramatically fried a circuit board during one of their trials, their EyePaint system worked as planned, right out of the gate. Lawrence and Imboden acknowledge that this prototype is fairly limited in scope: It gives an artist four color options and a few choices of shapes and objects that can be put to canvas. But they think it successfully demonstrates the potential of building eye tracking systems around lower-cost, readily available technologies, which could ultimately make assistive technologies like this more affordable.

Their advisor, Adnan Shaout, was so impressed with their work that he pushed them to write up their results and submit them to a peer-reviewed computer engineering journal. Their paper was accepted and recently published in the September issue of the Jordan Journal of Electrical Engineering, where others can now build on their work. Imboden and Lawrence say the idea of somebody reading their article and trying to improve the approaches they developed is pretty exciting. And even more thrilling than publishing, Imboden says, is the fact that she now has something she always wanted: a robot that can paint. “I like painting and I like engineering stuff, and I’ve just always wanted to build a painting robot. So it’s pretty cool I get to keep this one,” she says. Lawrence held on to some mementos too. He had a couple of the initial test canvases professionally framed, reminders of an exceptional senior design project that really made a mark.

###

Story by Lou Blouin