This article was originally published on August 3, 2020.

Robotics is a field that’s constantly challenged by a central, inconvenient paradox: Many things we consider difficult to do as humans — say, playing chess — are now relatively easy for machines. Conversely, tasks we think of as easy — like picking up and handing a physical object to a friend — can be really tough for a robot.

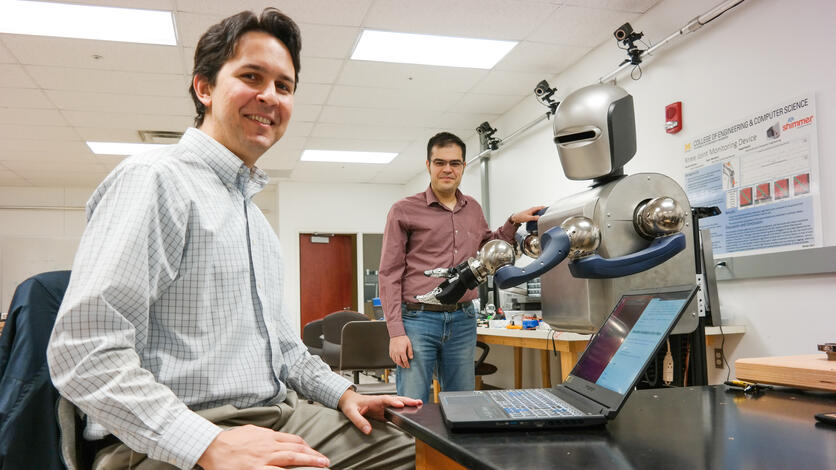

This paradox reveals our nature as both cognitive and mechanical creatures, qualities we share with robots. Robots, in fact, are distinguished from other intelligent machines by their ability to move through and manipulate their environments, which is what makes programming them such a daunting challenge for engineers. Humanoid robots, those with humanlike anatomy such as arms, legs and hands, are some of the trickiest of all. Each movable feature demands more intricate programming to control how an appendage interacts with its environment. “An autonomous vehicle, for example, has six degrees of freedom,” explains Assistant Professor Alireza Mohammadi, who’s part of a team working with a new NSF-funded humanoid robot at UM-Dearborn. “The humanoid robot we have in our lab has 36 degrees of freedom, so you can see it presents a much more difficult challenge.”

Their yet-to-be-named robot, which has a head, two arms, two hands and a movable base in place of legs, and looks a little like RoboCop, is giving Mohammadi, his colleague Associate Professor Samir Rawashdeh, and their students new avenues to study complex robotics problems. One area Mohammadi works in targets a major wish-list item for manufacturers: quick-pivoting robots that can be easily reprogrammed for new tasks. Currently, most industrial robots are called on to do only one task at a time. But in the emerging space of “flexible manufacturing,” where production lines are continually reconfigured to make a wide variety of things, robots that can be quickly taught new skills would be a big upgrade.

Mohammadi and Rawashdeh say achieving that objective involves thinking not just about a robot’s programming-based intelligence, but also about its anatomical intelligence. And that’s where humanoid robots have some interesting advantages. “In a sense, a hand has intelligence,” Mohammadi explains. “It’s a mechanical intelligence, because its specific structure, which has been optimized by nature, is sort of ready-made to grab and manipulate things. Mimicking that structure simplifies the computational process needed to control it, because its design or anatomy has already given you a head start.” For instance, he says that just as a blind person can do many things by touch alone, a robot with a sophisticated sense of artificial touch may be able to do tasks without its engineers having to code complicated vision systems.

Even so, Rawashdeh says leveraging those natural design advantages can only take you so far. With today’s technology, it’s still complicated to write code that moves a robotic appendage in ways we humans take for granted. When completing an everyday task, say, opening a refrigerator and grabbing a snack, we not only rely on unconscious knowledge of how to grasp things, we also know intuitively how to move our bodies out of the way of the swinging door. A robot has no such intuition beyond what its code tells it to do. And without good code, it’s very easy for a robot to look clumsy.

Moreover, Rawashdeh says this programming challenge is orders of magnitude more complicated when you put a robot in a dynamic, unstructured environment. “Robotic arms are very well understood in manufacturing settings: you program it once and it does the same thing a million times,” he explains. “But as soon as you want it to be where humans live, you can’t assume the floor will be clear. You can’t assume things are where they’re supposed to be. I suppose humans bring a certain amount of chaos with them.” And that makes things more difficult for the robots that might live among us someday.

Right now, Rawashdeh says robots capable of quickly making their own on-the-fly decisions about how to navigate that chaos still live only in our imaginations. But it’s one of the challenges he’s most interested in working on with his colleagues and students. One of his main areas of research focuses on the perception systems that could help robots move through unstructured human environments, like hospitals or elder care facilities. Using sensors like stereo cameras mounted in the head, the idea is the robot can “map” the objects in a room and then plan safe paths, adjusting to changes in the environment as they present themselves. It’s exciting research, but it’s still an enterprise where “baby steps” are big achievements. Even building the simulations, where they can safely preview new movements before trying them with the real robot, is a huge undertaking. In fact, they’ve spent much of their COVID-imposed time away from their lab working on exactly that. Their simulated testing environment now accounts for all of the robot's controllable parts. Rawashdeh says that should allow them to focus on “more exciting” things when they do return to the lab — such as grasping and manipulation skills — though they’ll start with near-term demonstrations like teaching the robot to pick up a drinking glass and safely set it back down.
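For readers curious what "map, then plan, then adjust" can look like in code, here is a minimal sketch in Python. It is not the UM-Dearborn team's software. It assumes a toy setup invented for illustration: the room is a small grid in which 0 marks free floor and 1 marks an occupied cell, and the planner is a plain breadth-first search. The names `plan_path`, `path_is_blocked` and `room` are hypothetical.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search over free cells (0) of an occupancy grid.

    Returns a list of (row, col) cells from start to goal, or None if
    no route exists.
    """
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


def path_is_blocked(grid, path):
    """True if any cell on a previously planned path is now occupied."""
    return any(grid[r][c] for r, c in path)


# Hypothetical 4x4 room map: 0 = free floor, 1 = occupied (say, a table).
room = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
start, goal = (0, 0), (3, 3)
first_path = plan_path(room, start, goal)

# Simulate the environment changing: something lands on the planned route.
blocked_cell = first_path[len(first_path) // 2]
room[blocked_cell[0]][blocked_cell[1]] = 1

# The robot notices the obstruction and plans again on the updated map.
new_path = first_path
if path_is_blocked(room, first_path):
    new_path = plan_path(room, start, goal)
```

A real perception system would build a far richer map from stereo camera data and would plan in continuous space for a many-jointed body, but the loop of sensing a change, updating the map and replanning is the same basic idea.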

“I think the fact that the expectations are so high is actually one of the huge challenges for researchers,” Rawashdeh says. “There’s a gap between what sci-fi kind of pushed on us for so many years and what’s actually possible. But I think people may be starting to get it. I think the challenges we’re seeing with autonomous cars may be helping the public understand that these are not easy problems to solve. It’s still very much a frontier, and where it all leads us, is something we don’t know yet.”

###

Rawashdeh and Mohammadi’s work is made possible by funding from the National Science Foundation and an internal grant from UM-Dearborn’s Institute for Advanced Vehicle Systems (IAVS). A number of other UM-Dearborn faculty contributed to the project, including Ya Sha (Alex) Yi, Yubao Chen, and former colleagues Yu Zheng and Stanley Baek.