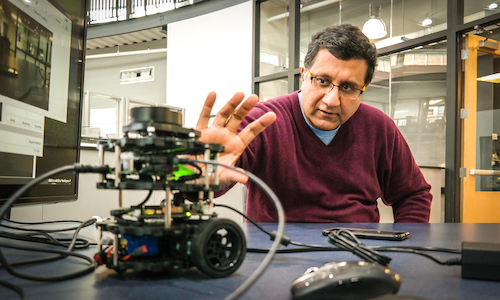

Associate Professor Sridhar Lakshmanan has taught the robotics vision course at UM-Dearborn plenty of times before. But this semester, he thought he’d spice up the class by adding an obvious missing ingredient: an actual robot. In this case, it’s an open-source research robot known as a “TurtleBot,” so named for its short, squat profile and its top speed of roughly 1.5 miles per hour. A herd of 15 TurtleBots was acquired for the class with matching funds from the Department of Electrical and Computer Engineering and the Henry W. Patton Center for Engineering Education and Practice.

To be fair, teaching the course without a robot wasn’t quite as counterintuitive as it sounds. Robots, after all, are fundamentally computers, and Lakshmanan said you can cover plenty about how they see by just focusing on the complicated math and coding at the core of robot vision.

That’s still a big part of the course. But now, all the students’ knowledge of algorithms and linear algebra will get put to the test in a final team project. The big exam will be making their TurtleBots autonomously traverse a mini obstacle course in the Institute for Advanced Vehicle Systems’ high bay lab—equipped only with the programming the student teams provide. As in, no remote controls allowed.

“When you or I are navigating on the road, for example, we use fiducial markers,” Lakshmanan said. “We can recognize stop signs or lane markers. We use color to distinguish between a potential obstacle and a background. We don’t get confused by shadows or reflections. In a class like this, we can’t give the robot all that capability, but we can give it enough that it can navigate in a somewhat controlled environment.”

Getting a robot to do those kinds of things, which our human brains do unconsciously, involves using complex mathematics and computer algorithms to mimic those human abilities. For a robot to recognize a lane marker, for example, you have to write code that allows it to pick out the edges and boundaries within a two-dimensional image.
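To give a flavor of what “picking out edges” can mean in code, here is a minimal sketch that uses a Sobel gradient filter, one common textbook approach. It is an illustration only, not the method taught in the course; the function name `sobel_edges`, the threshold value, and the toy image are all assumptions made for this example.

```python
import numpy as np

def sobel_edges(image, threshold=1.0):
    """Return a boolean mask of pixels where brightness changes sharply.

    Hypothetical helper for illustration; the threshold is an arbitrary choice.
    """
    img = np.asarray(image, dtype=float)
    # Sobel kernels respond to left-right (kx) and up-down (ky) brightness changes.
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
    ky = kx.T
    # Repeat border pixels so the output has the same shape as the input.
    padded = np.pad(img, 1, mode="edge")
    rows, cols = img.shape
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    # Slide the 3x3 kernels over the image by summing shifted copies.
    for dr in range(3):
        for dc in range(3):
            window = padded[dr:dr + rows, dc:dc + cols]
            gx += kx[dr, dc] * window
            gy += ky[dr, dc] * window
    # A large gradient magnitude marks an edge.
    return np.hypot(gx, gy) > threshold

# Toy image: dark floor on the left, a bright strip of tape on the right.
frame = np.zeros((5, 6))
frame[:, 3:] = 1.0
edges = sobel_edges(frame)
```

In the toy image, the mask lights up only along the boundary where the dark floor meets the bright strip. A real camera frame would also need noise filtering and a tuned threshold before a lane marker stood out this cleanly.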

Students will also be tackling another fundamental challenge in robotics vision: the difference between two-dimensional and three-dimensional objects. Lakshmanan said if a robot had only an optical camera, it could easily confuse a shadow for an object and then wildly veer off course to avoid it.

The TurtleBots are therefore equipped with a second set of “eyes”: a LIDAR system, which works much like radar but uses infrared pulses to determine how far away something is and whether it’s made of actual physical stuff. Even then, you still have to write code that lets the optical cameras and LIDAR systems talk with each other.
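As a rough sketch of how the two sensors might cross-check each other, the example below converts a pulse's round-trip time into a distance and then treats something as an obstacle only when both the camera and the LIDAR agree. It assumes a time-of-flight sensor, although the article does not say which ranging method the TurtleBot's LIDAR uses. The function names and the 1-meter cutoff are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def lidar_range_m(round_trip_s):
    """Distance to a target, given a pulse's round-trip time in seconds.

    Assumes a time-of-flight sensor: the pulse travels out and back,
    so the one-way distance is half the total path.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0

def is_physical_obstacle(camera_flagged, lidar_range, max_range_m=1.0):
    """Trust the camera only when the LIDAR also sees something nearby.

    lidar_range is None when no pulse came back (hypothetical convention).
    A shadow can fool the camera, but it returns no nearby LIDAR echo.
    """
    return bool(
        camera_flagged
        and lidar_range is not None
        and lidar_range <= max_range_m
    )

# A pulse that returns after 2 nanoseconds hit something about 0.3 m away.
distance = lidar_range_m(2e-9)
real_box = is_physical_obstacle(True, distance)   # camera and LIDAR agree
shadow = is_physical_obstacle(True, None)         # camera only: likely a shadow
```

The simple “both must agree” rule is one of many possible fusion strategies. It is used here because it matches the shadow problem described above.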

For students, the challenges they’ll face in the class will take them straight into the heart of the same core problems confronting engineers of driverless cars. Lakshmanan said almost all autonomous vehicles still use real-time perception—in conjunction with mapping and GPS technology—as their way of knowing what’s around them. As in humans, vision is a powerful unsung enabler of mobility.

“We have made many breakthroughs in the past decade, but there are still many problems that are in need of solutions,” Lakshmanan said. “The holy grail in vision is making a perception system that can see under all conditions—bright light, low light, complex shadows, reflections. And, of course, the algorithms must be robust enough to do it while travelling at relatively high speeds. That complexity is what makes vision so challenging.”

Luckily, for now, students will just have to achieve success at less than 2 miles per hour. Lakshmanan said even then, the obstacle course in the high bay lab will make for a challenging run—especially if passing clouds create some interesting lighting conditions or someone accidentally flips off the lights.

“If that happens,” Lakshmanan said, “then all bets are off.”