One of the early promises of artificial intelligence was that it could make decisions free of the discrimination that seems endemic to the human world. But as machine learning has spread into many aspects of our lives, it’s becoming increasingly clear that machines can suffer from the same biases that we do.

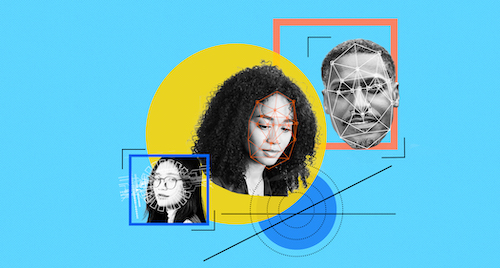

Dozens of high-profile examples from the past few years may sound like episode summaries from *Black Mirror*, but their consequences are real. Just last year, a Black Farmington Hills man was arrested and jailed after a police facial recognition algorithm wrongfully identified him as the man shoplifting in security footage. The error reflects a known weakness of such systems: they are often less accurate at identifying darker-skinned people. A few years ago, Amazon largely abandoned a system it had been using to screen job applicants after discovering that it consistently favored men over women. Similarly, in 2019, an ostensibly race-neutral algorithm widely used by hospitals and insurance companies was shown to favor white patients over Black patients for certain types of care. And in one of the strangest examples yet, Microsoft’s experiment with a social chatbot designed to mimic the tone and personality of a quippy teenager lasted less than a day. After just 16 hours of interactions on Twitter, “Tay” was saying so many racist and offensive things that the company had to shut it down.

Understanding why this happens requires knowing a little bit about how machine learning models are built, says UM-Dearborn Assistant Professor Birhanu Eshete. “Suppose you wanted to teach a child the difference between a cat and a dog. You would probably start by showing them a bunch of pictures of both cats and dogs, and during that process, the child would absorb some features of cats and dogs. Then, hopefully, when you show them a picture they’ve never seen before, they can figure out if it’s a cat or a dog,” Eshete explains. “This is pretty much what we’re doing when we’re training a machine learning model: We show it a bunch of examples and it learns to classify things based on distinguishing features, and hopefully most of the time, it does a good job.”

This method of learning by example reveals one of the main ways bias can infiltrate a machine learning model. For instance, if a facial recognition algorithm is trained mostly on images of lighter-skinned people, it may be less accurate at identifying darker-skinned individuals. In much the same way, Amazon’s resume screening model proved to be biased toward men because it was trained to recognize keywords from the resumes of its most successful current employees, who were disproportionately male.
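For technically minded readers, one common way to surface this kind of problem is to break a model’s accuracy down by group instead of reporting a single overall number. The short Python sketch below illustrates the idea. The function name, the group labels “A” and “B,” and the toy results are all hypothetical, invented for illustration; they do not come from any of the systems described in this story.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return accuracy per group from (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, prediction in records:
        total[group] += 1
        if truth == prediction:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical toy evaluation: four test cases from each of two groups.
results = [
    ("A", "match", "match"), ("A", "no_match", "no_match"),
    ("A", "match", "match"), ("A", "no_match", "no_match"),
    ("B", "match", "no_match"), ("B", "no_match", "match"),
    ("B", "match", "match"), ("B", "no_match", "no_match"),
]

scores = accuracy_by_group(results)
gap = max(scores.values()) - min(scores.values())
print(scores)                     # {'A': 1.0, 'B': 0.5}
print(f"accuracy gap: {gap:.2f}")  # accuracy gap: 0.50
```

Averaged together, this toy model is right 75 percent of the time, which can hide the fact that it is right every time for one group and only half the time for the other.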

For a while, this bias in the “training data” was thought to be the root of the problem. But DAIR Center Director and Associate Professor Marouane Kessentini says researchers have since identified all kinds of ways bias can creep into machine learning models. Tay, for example, wasn’t trained on racist language and therefore didn’t start out as a racist chatbot, Kessentini explains. “What happened in that case was the bot started learning from its interactions with people on Twitter, and specifically from a community of users who were tweeting out racist comments. So this is an example of how the bias can come from the model’s interaction with humans. And when you consider how many technologies are continuously learning from their interactions with us, like Alexa, for example, you can see why this is a very significant issue.”

Other potential sources of bias are even subtler. For example, Eshete says models that seek to optimize processes inevitably involve trade-offs between competing objectives, and the values assigned to those priorities can reflect biases from the human world. Similarly, every model ultimately has to be evaluated for effectiveness, which, again, involves human judgment calls. Kessentini says even programmers themselves can be a source of bias. That is one reason AI programs at universities, like the one at UM-Dearborn, increasingly include training in the social sciences. In fact, Kessentini and Eshete are currently developing a new course on AI ethics to specifically prepare students for these kinds of challenges.

All of this raises the question: Is a more ethical AI possible? Both Kessentini and Eshete think so, but they say it’s still early days when it comes to solutions. For example, basic statistical analysis can help “clean” training data, which is often the initial source of bias. But Kessentini says that because many models keep learning after they are deployed, we will have to develop automated ways of detecting bias as the models evolve. “And this is a huge challenge because the volume of data is so enormous. In our work with eBay, for example, there are a billion data requests to their data banks every single day. This is simply a scale at which it’s impossible for humans to manually monitor the data for bias.”

A strange quality inherent to machine learning itself poses an even more fundamental challenge. A model might be able to sort cats from dogs, but exactly how it does so is often unknown. This “black box” nature of machine learning has led to a push for so-called explainable AI, an emerging discipline that aims to build models that can reveal to humans how they arrive at their decisions. “This has huge implications for the real world,” Eshete says. “If your loan is denied or your application for college admission is rejected, and that decision was made by an algorithm and yet no one can explain to you why, I don't think we can call that an ethical system.”

While researchers work toward breakthroughs like explainable AI, Eshete and Kessentini say there are many common sense steps we can take today. Regular required auditing of companies and institutions that use AI in high-stakes applications — like loans, job applications and law enforcement — could keep algorithms from completely running amok. We can continue working on improving processes to systematically detect and mitigate bias, and engage in frank, fact-based conversations about bias in our human decision making. And we can make bigger investments in bias research — and in diversifying the AI field.

With or without these steps, AI will likely continue to extend its reach into our lives. Guiding its direction may be one area where our human intelligence still has no substitute.

###

Story by Lou Blouin. If you’re a member of the media and would like to interview Associate Professor Marouane Kessentini or Assistant Professor Birhanu Eshete on this topic, drop us a line at [email protected].